Traditional NLP vs Large Language Models

October 10, 2024 | By Diana Chen, Data Analyst

The Evolution of Natural Language Processing

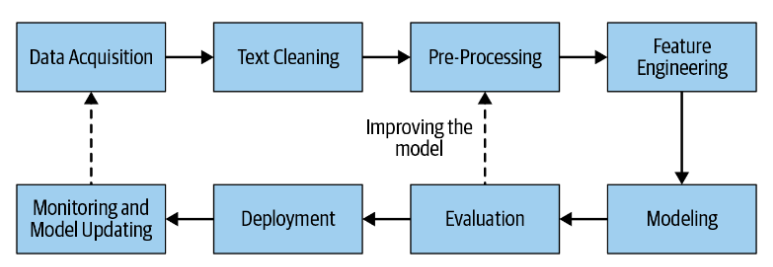

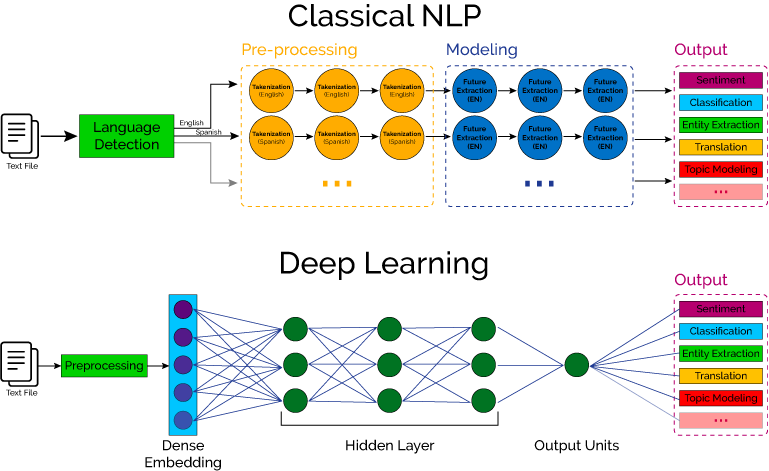

The field of Natural Language Processing (NLP) stands at a pivotal moment. Traditional approaches, which have served as the backbone of text analysis for decades, are now being challenged by the emergence of Large Language Models (LLMs). This shift represents not just a technical evolution, but a fundamental change in how we approach language understanding and processing.

Traditional NLP: Foundation and Strengths

Traditional NLP tools such as NLTK and spaCy build on well-defined linguistic rules and statistical models. These frameworks excel at:

- Precise tokenization and parsing

- Rule-based pattern matching

- Deterministic outcomes

- Resource efficiency
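To make the first three strengths concrete, here is a minimal sketch of rule-based tokenization and pattern matching. It uses only Python's standard `re` module as a stand-in for what NLTK or spaCy provide; the patterns `TOKEN_RE` and `DATE_RE` and the helper names are illustrative assumptions, not part of either library.

```python
import re

# Hypothetical token rule: decimal numbers, words (with an optional
# internal apostrophe), or any single punctuation character.
TOKEN_RE = re.compile(r"\d+(?:\.\d+)?|\w+(?:'\w+)?|[^\w\s]")

# Hypothetical matching rule: ISO-style dates such as 2024-10-10.
DATE_RE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")


def tokenize(text: str) -> list[str]:
    """Split text into tokens using a fixed, hand-written rule."""
    return TOKEN_RE.findall(text)


def find_dates(text: str) -> list[str]:
    """Return every substring that matches the date rule."""
    return DATE_RE.findall(text)


sample = "Diana's report, published 2024-10-10, covers 3.5 million tokens."
tokens = tokenize(sample)
dates = find_dates(sample)
print(tokens)
print(dates)
```

The same input always yields the same tokens and matches, and the whole pipeline runs in microseconds on a CPU, which is the determinism and resource efficiency the list describes. The tradeoff is that every rule has to be written and maintained by hand.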

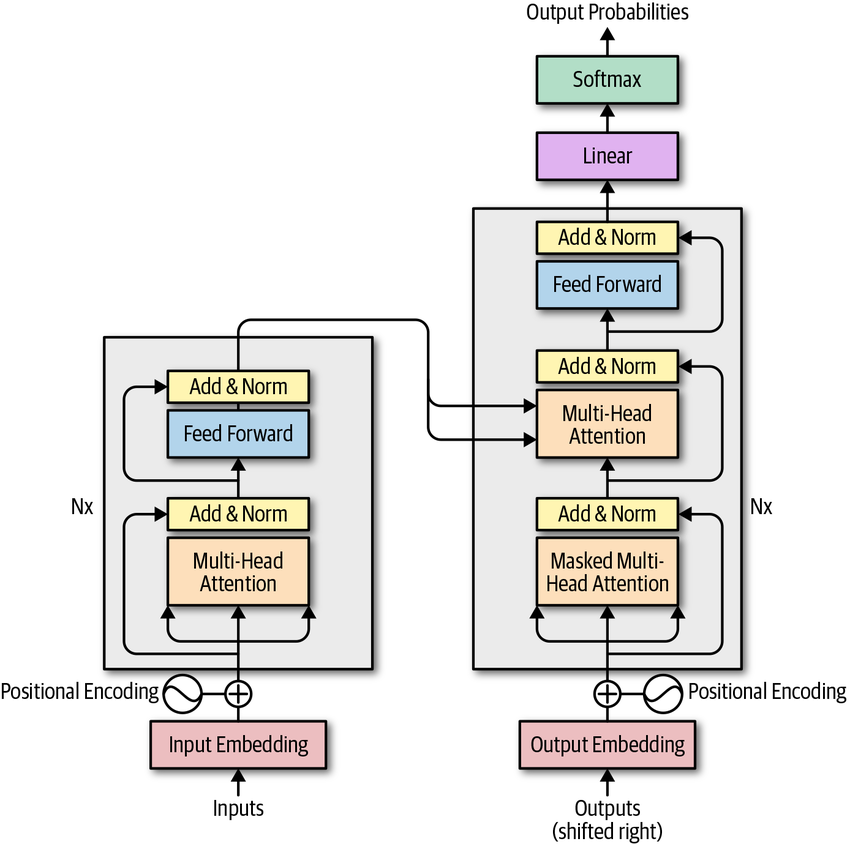

The LLM Revolution

Large Language Models like GPT and BERT have introduced a paradigm shift in NLP. Instead of relying on hand-written rules and task-specific features, these models learn language patterns from vast amounts of text during pretraining, offering:

- Contextual understanding

- Natural language generation

- Transfer learning capabilities

- Adaptive language processing

Performance Analysis

Our team benchmarked both approaches across a range of tasks. The qualitative findings are summarized below.

Traditional NLP Advantages

- Predictable resource usage

- Lower computational requirements

- Faster processing for simple tasks

- Better explainability

LLM Advantages

- Superior context understanding

- Better handling of ambiguity

- More natural outputs

- Broader language coverage

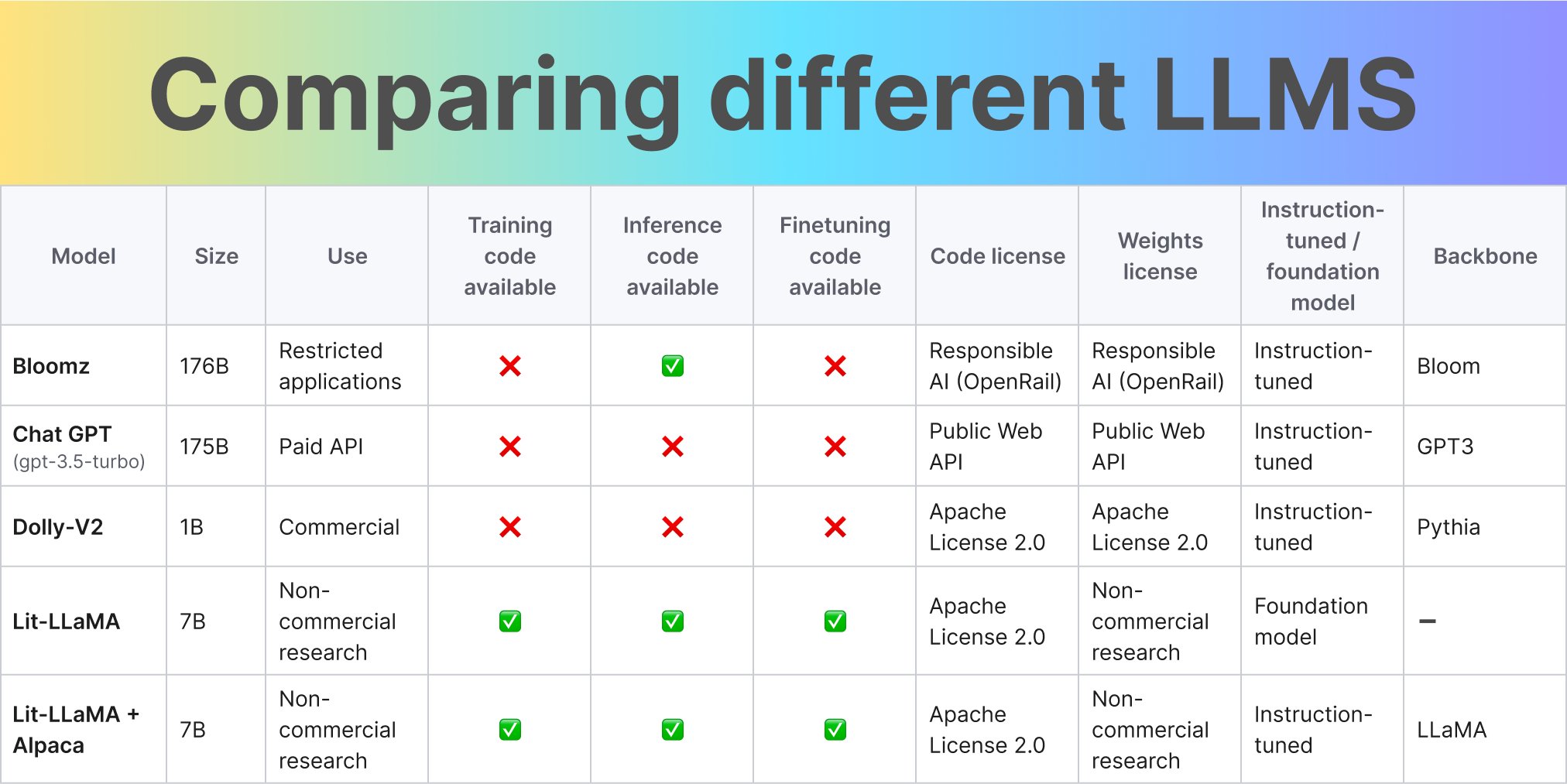

Cost and Resource Implications

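The choice between traditional NLP and LLMs often comes down to practical considerations. Traditional tools typically involve a one-time implementation cost and modest computational resources, and they often run on a single CPU. In contrast, LLMs require significant computational power when self-hosted and carry ongoing per-token charges when accessed through an API. Processing 1 million tokens with a traditional pipeline usually costs little beyond the machine time, while the same volume sent to an LLM API can cost noticeably more, with the exact gap depending on the provider, the model, and how much text is generated.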
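The cost comparison can be worked through with simple arithmetic. The sketch below is illustrative only: the function names are hypothetical, and the price, throughput, and machine rate are placeholder assumptions rather than measured or quoted figures. Substitute your provider's current pricing and your own benchmark numbers.

```python
def estimate_api_cost(num_tokens: int, price_per_million_usd: float) -> float:
    """Cost of sending num_tokens through a per-token-billed API."""
    return num_tokens / 1_000_000 * price_per_million_usd


def estimate_local_cost(
    num_tokens: int, tokens_per_second: float, machine_usd_per_hour: float
) -> float:
    """Cost of processing num_tokens locally, billed as machine time."""
    hours = num_tokens / tokens_per_second / 3600
    return hours * machine_usd_per_hour


# Placeholder inputs (assumptions, not real prices or benchmarks).
TOKENS = 1_000_000
api_cost = estimate_api_cost(TOKENS, price_per_million_usd=10.0)
local_cost = estimate_local_cost(
    TOKENS, tokens_per_second=10_000, machine_usd_per_hour=0.50
)

print(f"API:   ${api_cost:.2f}")
print(f"Local: ${local_cost:.4f}")
```

Both estimates scale linearly with volume, so the ratio between them matters more than either absolute figure when projecting costs for a growing workload.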

Choosing the Right Approach

The decision between traditional NLP and LLMs should be based on specific use cases:

Use Traditional NLP for:

- Well-defined, rule-based tasks

- Projects with limited computational resources

- Applications requiring deterministic outputs

- Real-time processing requirements

Use LLMs for:

- Complex language understanding tasks

- Natural language generation

- Projects requiring contextual awareness

- Applications handling diverse language patterns

Conclusion

The future of NLP likely lies in hybrid approaches that leverage the strengths of both traditional methods and LLMs. Understanding the tradeoffs between these approaches enables developers to make informed decisions based on their specific requirements, resources, and use cases.
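One way to picture a hybrid approach is a router that tries a cheap, deterministic rule first and calls an LLM only when the rule finds nothing. The sketch below assumes an email-extraction task; `EMAIL_RE`, `hybrid_extract`, and `stub_llm` are hypothetical names, and `stub_llm` is a placeholder for a real model call, not any provider's API.

```python
import re
from typing import Callable, Optional

# Hypothetical rule: a simplified email pattern, not a full validator.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def extract_email_rule(text: str) -> Optional[str]:
    """Deterministic path: return the first regex match, if any."""
    match = EMAIL_RE.search(text)
    return match.group(0) if match else None


def hybrid_extract(
    text: str, llm_fallback: Callable[[str], Optional[str]]
) -> tuple[Optional[str], str]:
    """Try the rule first; fall back to the LLM only when it fails.

    Returns the extracted value and which path produced it.
    """
    result = extract_email_rule(text)
    if result is not None:
        return result, "rule"
    return llm_fallback(text), "llm"


def stub_llm(text: str) -> Optional[str]:
    """Placeholder for a real LLM call (assumption for illustration)."""
    return "<llm output placeholder>"


print(hybrid_extract("Contact diana@example.com for details", stub_llm))
print(hybrid_extract("Reach me at diana at example dot com", stub_llm))
```

Routing this way keeps the common, well-formed cases fast and explainable, and spends LLM calls only on inputs the rules cannot handle.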